[Tile-serving] [openstreetmap/osm2pgsql] OOM killed while building index on table planet_osm_rels (#834)

Josip Rodin

notifications at github.com

Tue Mar 27 07:22:30 UTC 2018

Hi,

I think I exhibited symptoms similar to https://github.com/openstreetmap/osm2pgsql/issues/351

The invocation was through Nominatim's setup.php:

```

time /srv/nominatim/Nominatim/build/utils/setup.php --osm-file /opt/import/europe-latest.osm.pbf --all --osm2pgsql-cache $((25*1024)) --threads 4

[...]

osm2pgsql version 0.93.0-dev (64 bit id space)

Using projection SRS 4326 (Latlong)

NOTICE: table "place" does not exist, skipping

Allocating memory for dense node cache

Allocating dense node cache in one big chunk

Allocating memory for sparse node cache

Sharing dense sparse

Node-cache: cache=25600MB, maxblocks=409600*65536, allocation method=11

Mid: loading persistent node cache from /opt/flatnode/europe.flatnode

Mid: pgsql, cache=25600

Setting up table: planet_osm_nodes

Setting up table: planet_osm_ways

Setting up table: planet_osm_rels

Reading in file: /opt/import/europe-latest.osm.pbf

Using PBF parser.

[...]

Node stats: total(2062884192), max(5503870021) in 3082s

Way stats: total(251002077), max(572904737) in 8024s

Relation stats: total(3916024), max(8147194) in 3547s

Setting up table: planet_osm_nodes

Setting up table: planet_osm_ways

Setting up table: planet_osm_rels

Going over pending ways...

0 ways are pending

Using 1 helper-processes

^MFinished processing 0 ways in 0 s

Going over pending relations...

0 relations are pending

Using 1 helper-processes

^MFinished processing 0 relations in 0 s

Stopping table: planet_osm_nodes

Stopped table: planet_osm_nodes in 0s

Stopping table: planet_osm_ways

Stopped table: planet_osm_ways in 0s

Stopping table: planet_osm_rels

Building index on table: planet_osm_rels

Killed

ERROR: Error executing external command: /srv/nominatim/Nominatim-3.1.0/build/osm2pgsql/osm2pgsql --flat-nodes /opt/flatnode/europe.flatnode -lsc -O gazetteer --hstore --number-processes 1 -C 25600 -P 5432 -d nominatim /opt/import/europe-latest.osm.pbf

Error executing external command: /srv/nominatim/Nominatim-3.1.0/build/osm2pgsql/osm2pgsql --flat-nodes /opt/flatnode/europe.flatnode -lsc -O gazetteer --hstore --number-processes 1 -C 25600 -P 5432 -d nominatim /opt/import/europe-latest.osm.pbf

real 247m3.825s

user 322m23.432s

sys 7m0.540s

```

This is the Linux kernel log of the crashed run:

```

[263891.316993] postgres invoked oom-killer: gfp_mask=0x24201ca, order=0, oom_score_adj=0

[263891.317001] postgres cpuset=/ mems_allowed=0

[263891.317047] CPU: 5 PID: 14798 Comm: postgres Not tainted 4.4.0-116-generic #140-Ubuntu

[263891.317050] Hardware name: OpenStack Foundation OpenStack Nova, BIOS 2:1.10.2-58953eb7 04/01/2014

[263891.317053] 0000000000000286 123cdd746b262241 ffff880701b4f9d8 ffffffff813ffc13

[263891.317057] ffff880701b4fb90 ffff88077102e200 ffff880701b4fa48 ffffffff8121012e

[263891.317061] ffff88076fae1c00 ffff88076d659150 ffff88076d658e00 ffff880701b4fb90

[263891.317065] Call Trace:

[263891.317116] [<ffffffff813ffc13>] dump_stack+0x63/0x90

[263891.317137] [<ffffffff8121012e>] dump_header+0x5a/0x1c5

[263891.317153] [<ffffffff811968f2>] oom_kill_process+0x202/0x3c0

[263891.317157] [<ffffffff81196d19>] out_of_memory+0x219/0x460

[263891.317167] [<ffffffff8119cd45>] __alloc_pages_slowpath.constprop.88+0x965/0xb00

[263891.317172] [<ffffffff8119d168>] __alloc_pages_nodemask+0x288/0x2a0

[263891.317179] [<ffffffff811e6ccc>] alloc_pages_current+0x8c/0x110

[263891.317183] [<ffffffff81192e0b>] __page_cache_alloc+0xab/0xc0

[263891.317187] [<ffffffff81195390>] filemap_fault+0x150/0x400

[263891.317208] [<ffffffff812a81d6>] ext4_filemap_fault+0x36/0x50

[263891.317214] [<ffffffff811c2216>] __do_fault+0x56/0xf0

[263891.317218] [<ffffffff811c5d75>] handle_mm_fault+0xfa5/0x1820

[263891.317233] [<ffffffff810bd0dc>] ? set_next_entity+0x9c/0xb0

[263891.317249] [<ffffffff8106c747>] __do_page_fault+0x197/0x400

[263891.317254] [<ffffffff8106ca17>] trace_do_page_fault+0x37/0xe0

[263891.317261] [<ffffffff81064fb9>] do_async_page_fault+0x19/0x70

[263891.317286] [<ffffffff81851a08>] async_page_fault+0x28/0x30

[263891.317318] Mem-Info:

[263891.317326] active_anon:7358988 inactive_anon:22263 isolated_anon:0

active_file:15 inactive_file:0 isolated_file:0

unevictable:913 dirty:0 writeback:0 unstable:0

slab_reclaimable:56535 slab_unreclaimable:7638

mapped:547407 shmem:548861 pagetables:24885 bounce:0

free:48362 free_pcp:732 free_cma:0

[263891.317333] Node 0 DMA free:15908kB min:32kB low:40kB high:48kB active_anon:0kB inactive_anon:0kB active_file:0kB inactive_file:0kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:15992kB managed:15908kB mlocked:0kB dirty:0kB writeback:0kB mapped:0kB shmem:0kB slab_reclaimable:0kB slab_unreclaimable:0kB kernel_stack:0kB pagetables:0kB unstable:0kB bounce:0kB free_pcp:0kB local_pcp:0kB free_cma:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? yes

[263891.317343] lowmem_reserve[]: 0 2963 29419 29419 29419

[263891.317348] Node 0 DMA32 free:114524kB min:6800kB low:8500kB high:10200kB active_anon:2887820kB inactive_anon:10828kB active_file:40kB inactive_file:0kB unevictable:568kB isolated(anon):0kB isolated(file):0kB present:3129200kB managed:3048436kB mlocked:568kB dirty:0kB writeback:0kB mapped:226864kB shmem:227984kB slab_reclaimable:21872kB slab_unreclaimable:2716kB kernel_stack:144kB pagetables:8112kB unstable:0kB bounce:0kB free_pcp:868kB local_pcp:112kB free_cma:0kB writeback_tmp:0kB pages_scanned:1532 all_unreclaimable? yes

[263891.317358] lowmem_reserve[]: 0 0 26456 26456 26456

[263891.317367] Node 0 Normal free:63016kB min:60744kB low:75928kB high:91116kB active_anon:26548132kB inactive_anon:78224kB active_file:20kB inactive_file:0kB unevictable:3084kB isolated(anon):0kB isolated(file):0kB present:27574272kB managed:27091108kB mlocked:3084kB dirty:0kB writeback:0kB mapped:1962764kB shmem:1967460kB slab_reclaimable:204268kB slab_unreclaimable:27836kB kernel_stack:3696kB pagetables:91428kB unstable:0kB bounce:0kB free_pcp:2060kB local_pcp:288kB free_cma:0kB writeback_tmp:0kB pages_scanned:260 all_unreclaimable? yes

[263891.317398] lowmem_reserve[]: 0 0 0 0 0

[263891.317403] Node 0 DMA: 1*4kB (U) 0*8kB 0*16kB 1*32kB (U) 2*64kB (U) 1*128kB (U) 1*256kB (U) 0*512kB 1*1024kB (U) 1*2048kB (M) 3*4096kB (M) = 15908kB

[263891.317448] Node 0 DMA32: 1904*4kB (UMEH) 1458*8kB (UMEH) 895*16kB (UMEH) 730*32kB (MEH) 369*64kB (ME) 132*128kB (ME) 43*256kB (ME) 9*512kB (ME) 1*1024kB (M) 0*2048kB 0*4096kB = 114112kB

[263891.317514] Node 0 Normal: 5952*4kB (UMEH) 4461*8kB (UMEH) 215*16kB (UE) 0*32kB 0*64kB 0*128kB 0*256kB 0*512kB 0*1024kB 0*2048kB 0*4096kB = 62936kB

[263891.317532] Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=1048576kB

[263891.317538] Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=2048kB

[263891.317540] 549521 total pagecache pages

[263891.317549] 0 pages in swap cache

[263891.317551] Swap cache stats: add 0, delete 0, find 0/0

[263891.317553] Free swap = 0kB

[263891.317555] Total swap = 0kB

[263891.317557] 7679866 pages RAM

[263891.317558] 0 pages HighMem/MovableOnly

[263891.317560] 141003 pages reserved

[263891.317561] 0 pages cma reserved

[263891.317563] 0 pages hwpoisoned

[263891.317564] [ pid ] uid tgid total_vm rss nr_ptes nr_pmds swapents oom_score_adj name

[263891.317575] [ 694] 0 694 13015 5172 30 3 0 0 systemd-journal

[263891.317580] [ 730] 0 730 25742 135 17 3 0 0 lvmetad

[263891.317584] [ 1195] 0 1195 4030 533 12 3 0 0 dhclient

[263891.317588] [ 1309] 0 1309 1305 29 8 3 0 0 iscsid

[263891.317592] [ 1310] 0 1310 1430 877 9 3 0 -17 iscsid

[263891.317595] [ 1314] 0 1314 77160 337 20 4 0 0 lxcfs

[263891.317599] [ 1322] 0 1322 6932 258 18 3 0 0 cron

[263891.317603] [ 1325] 107 1325 10753 381 25 3 0 -900 dbus-daemon

[263891.317607] [ 1330] 104 1330 64098 1076 27 3 0 0 rsyslogd

[263891.317610] [ 1332] 0 1332 68622 590 36 3 0 0 accounts-daemon

[263891.317614] [ 1335] 0 1335 6511 383 18 3 0 0 atd

[263891.317618] [ 1342] 0 1342 1099 302 8 3 0 0 acpid

[263891.317621] [ 1344] 0 1344 150692 3683 46 6 0 -900 snapd

[263891.317625] [ 1351] 0 1351 7136 269 18 3 0 0 systemd-logind

[263891.317633] [ 1353] 0 1353 16377 576 37 3 0 -1000 sshd

[263891.317637] [ 1380] 0 1380 3343 34 11 3 0 0 mdadm

[263891.317640] [ 1384] 0 1384 69294 590 39 3 0 0 polkitd

[263891.317644] [ 1431] 0 1431 4893 412 14 3 0 0 irqbalance

[263891.317648] [ 1441] 0 1441 3618 405 12 3 0 0 agetty

[263891.317651] [ 1442] 0 1442 3664 329 12 3 0 0 agetty

[263891.317655] [ 5681] 100 5681 25081 303 20 3 0 0 systemd-timesyn

[263891.317662] [12231] 0 12231 10556 384 22 3 0 -1000 systemd-udevd

[263891.317667] [13785] 0 13785 73091 3130 45 3 0 0 fail2ban-server

[263891.317670] [19845] 0 19845 88852 4545 132 4 0 0 apache2

[263891.317690] [24491] 33 24491 88983 2059 124 3 0 0 apache2

[263891.317694] [24492] 33 24492 88870 2259 122 3 0 0 apache2

[263891.317698] [24493] 33 24493 88870 2259 122 3 0 0 apache2

[263891.317702] [24495] 33 24495 88870 2259 122 3 0 0 apache2

[263891.317705] [24496] 33 24496 88983 2310 124 3 0 0 apache2

[263891.317709] [25184] 33 25184 88870 2259 122 3 0 0 apache2

[263891.317713] [25527] 0 25527 23199 234 49 4 0 0 sshd

[263891.317716] [25529] 1001 25529 11319 351 26 3 0 0 systemd

[263891.317720] [25531] 1001 25531 52146 466 36 4 0 0 (sd-pam)

[263891.317724] [25593] 1001 25593 23234 275 48 4 0 0 sshd

[263891.317729] [25596] 1001 25596 10849 929 26 3 0 0 zsh

[263891.317733] [25896] 0 25896 13195 2750 30 3 0 0 munin-node

[263891.317737] [27479] 113 27479 580196 18552 99 4 0 -900 postgres

[263891.317741] [27481] 113 27481 580875 536815 1110 5 0 0 postgres

[263891.317745] [27482] 113 27482 580196 492026 1100 5 0 0 postgres

[263891.317749] [27483] 113 27483 580196 4430 66 4 0 0 postgres

[263891.317752] [27484] 113 27484 580298 625 65 4 0 0 postgres

[263891.317755] [27485] 113 27485 37136 644 55 3 0 0 postgres

[263891.317759] [ 9255] 1001 9255 6103 569 16 3 0 0 screen

[263891.317763] [ 9256] 1001 9256 10349 839 26 3 0 0 zsh

[263891.317767] [14032] 0 14032 12447 537 30 3 0 0 sudo

[263891.317770] [14033] 1004 14033 4782 415 15 3 0 0 script

[263891.317774] [14034] 1004 14034 4571 549 15 3 0 0 bash

[263891.317778] [14695] 1004 14695 60232 1999 108 4 0 0 setup.php

[263891.317781] [14700] 113 14700 657125 4395 188 4 0 0 postgres

[263891.317785] [14793] 1004 14793 1126 142 8 3 0 0 sh

[263891.317789] [14794] 1004 14794 17553080 6646846 18231 70 0 0 osm2pgsql

[263891.317792] [14798] 113 14798 740513 264531 1428 6 0 0 postgres

[263891.317797] [21177] 1001 21177 6035 436 17 3 0 0 screen

[263891.317800] [27179] 113 27179 582022 2864 83 5 0 0 postgres

[263891.317804] [27180] 113 27180 582022 2751 83 5 0 0 postgres

[263891.317807] [27181] 113 27181 582022 2867 82 5 0 0 postgres

[263891.317811] [27182] 113 27182 581986 1755 81 5 0 0 postgres

[263891.317815] [27185] 113 27185 608463 8222 136 5 0 0 postgres

[263891.317818] [27272] 113 27272 582551 6633 104 5 0 0 postgres

[263891.317822] [27291] 0 27291 13195 2616 30 3 0 0 /usr/sbin/munin

[263891.317826] [27292] 0 27292 4542 130 14 3 0 0 if_ens3

[263891.317829] [27293] 113 27293 580436 1569 70 4 0 0 postgres

[263891.317833] [27294] 0 27294 4543 98 13 3 0 0 if_ens3

[263891.317836] Out of memory: Kill process 14794 (osm2pgsql) score 884 or sacrifice child

[263891.322761] Killed process 14794 (osm2pgsql) total-vm:70212320kB, anon-rss:26585740kB, file-rss:1644kB

```

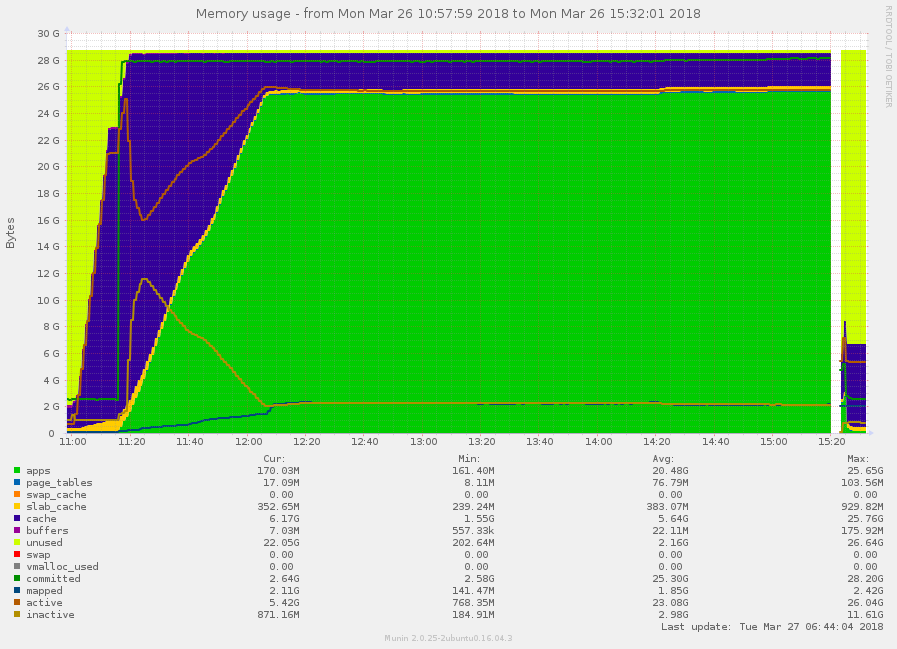

This is the memory graph of the crashed run:

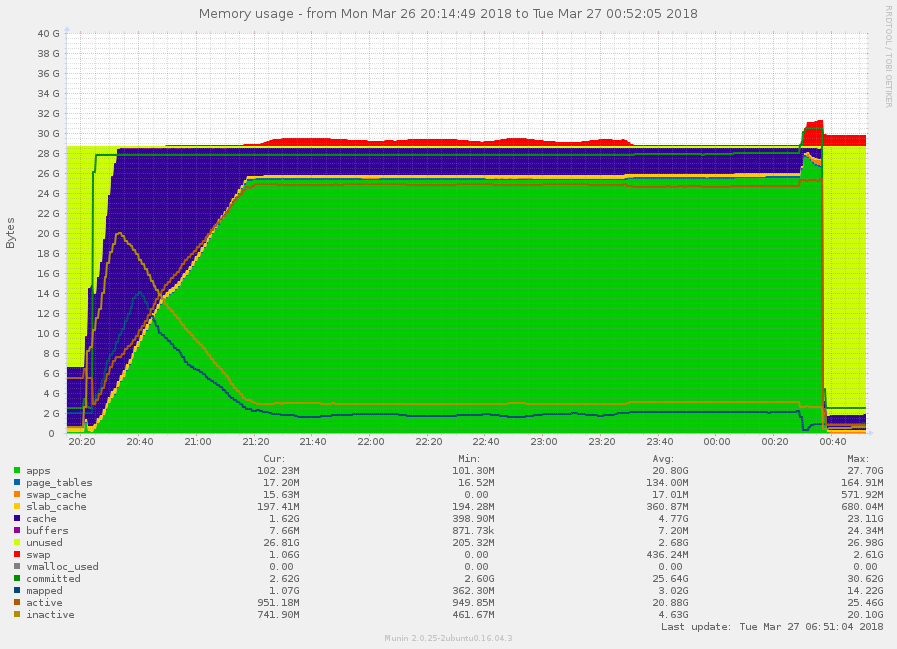

Afterwards I added 8 GB swap and it went through like this:

```

time /srv/nominatim/Nominatim/build/utils/setup.php --osm-file /opt/import/europe-latest.osm.pbf --osm2pgsql-cache $((25*1024)) --threads 4 --import-data

[...]

Node stats: total(2062884192), max(5503870021) in 3305s

Way stats: total(251002077), max(572904737) in 7773s

Relation stats: total(3916024), max(8147194) in 3629s

Setting up table: planet_osm_nodes

Setting up table: planet_osm_ways

Setting up table: planet_osm_rels

Going over pending ways...

0 ways are pending

Using 1 helper-processes

^MFinished processing 0 ways in 0 s

Going over pending relations...

0 relations are pending

Using 1 helper-processes

^MFinished processing 0 relations in 0 s

Stopping table: planet_osm_nodes

Stopped table: planet_osm_nodes in 0s

Stopping table: planet_osm_ways

Stopped table: planet_osm_ways in 0s

Stopping table: planet_osm_rels

Building index on table: planet_osm_rels

Stopped table: planet_osm_rels in 483s

node cache: stored: 2002553530(97.08%), storage efficiency: 59.68% (dense blocks: 213590, sparse nodes: 802857374), hit rate: 97.44%

Osm2pgsql took 15194s overall

Summary of warnings:

2018-03-27 00:37:36 == Setup finished.

real 253m14.902s

user 323m58.592s

sys 10m4.312s

```

And the memory graph of that good run was:

So it appears it had been in that memory spike in the final five minutes of a four-hour process, what a waste.

The core issue is that the user thinks they're telling the program to consume 25 GB of memory, yet the program takes a substantial amount more, and because the use case is such that it typically pushes the hardware to its limits, the software spirals out of control, and there's no recovery (the long-running process has to be repeated from the beginning, the docs I found all discourage it any attempt not to do that).

In hindsight, the machine had about 20% leeway which may or may not have been reasonable. I got that estimate from talking to Paul Norman on the OSM geocoding mailing list - cf. the thread at https://lists.openstreetmap.org/pipermail/geocoding/2018-March/001925.html

Either way, these hardware requirements and implications need to be better documented if we don't want users to keep reproducing essentially the same issues over and over (that's my impression from googling this).

Please document this. TIA.

--

You are receiving this because you are subscribed to this thread.

Reply to this email directly or view it on GitHub:

https://github.com/openstreetmap/osm2pgsql/issues/834

-------------- next part --------------

An HTML attachment was scrubbed...

URL: <http://lists.openstreetmap.org/pipermail/tile-serving/attachments/20180327/599ec152/attachment-0001.html>

More information about the Tile-serving

mailing list